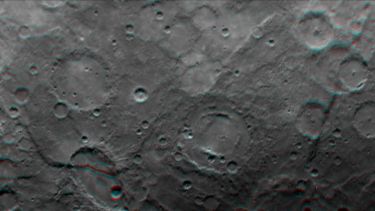

The film shows breathtaking footage captured by the joint European Space Agency (ESA)/Japan Aerospace Exploration Agency (JAXA) BepiColombo mission during its third flyby of Mercury. BepiColombo is Europe’s first mission to Mercury, the planet closest to the Sun.

ESA commissioned the acclaimed artist to compose soundtracks for the flybys, the latest of which offered a rare glimpse of Mercury’s night side.

±ı≥¢ƒÄ composed the music for the remarkable sequence with the assistance of the Artificial Musical Intelligence (AMI) tool developed by the University’s Machine Intelligence for Musical Audio (MIMA) research group.

Dr Ning Ma, from the Department of Computer Science, explains how the technology works: “AMI uses AI to discover patterns in musical structures such as melodies, chords and rhythm from tens of thousands of musical compositions.

“It encodes music data in a way that is similar to reading a music score, enabling the technology to better capture musical structures. The learning of musical structures is enhanced by adding phrasing embeddings - expressive nuances and rhythmic patterns captured numerically - at different time scales. As a result, AMI is able to generate compositions for various musical instruments and different musical styles with a coherent structure.”
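The article does not describe AMI’s internals beyond Dr Ma’s summary. As a rough illustration only, the Python sketch below shows one way a score-like encoding and phrasing embeddings at two time scales could fit together. The token names (`BAR`, `POS`, `PITCH`, `DUR`), the `Note` structure, the 4/4 metre, the four-bar phrase length and the sinusoidal form of the embeddings are all assumptions made for this example, not details of AMI.

```python
import math
from dataclasses import dataclass


@dataclass
class Note:
    bar: int         # bar (measure) index, as on a printed score
    beat: float      # onset within the bar, in beats
    pitch: int       # MIDI pitch number (60 = middle C)
    duration: float  # length in beats


def encode_score(notes):
    """Flatten notes into score-like tokens, read bar by bar, left to right.

    Hypothetical token scheme for illustration; not AMI's actual format.
    """
    tokens = []
    current_bar = None
    for n in sorted(notes, key=lambda n: (n.bar, n.beat, n.pitch)):
        if n.bar != current_bar:
            tokens.append(("BAR", n.bar))  # bar line, like on a score
            current_bar = n.bar
        tokens.append(("POS", n.beat))
        tokens.append(("PITCH", n.pitch))
        tokens.append(("DUR", n.duration))
    return tokens


def sinusoidal(position, period, dim):
    """Encode a position within a repeating cycle of length `period` as sin/cos pairs."""
    vec = []
    for k in range(dim // 2):
        angle = 2 * math.pi * (k + 1) * position / period
        vec.extend([math.sin(angle), math.cos(angle)])
    return vec


def phrasing_embedding(bar, beat, beats_per_bar=4, bars_per_phrase=4, dim=8):
    """Concatenate embeddings at two time scales: beat-in-bar and bar-in-phrase.

    The 4/4 metre and four-bar phrase are assumptions for this sketch.
    """
    beat_level = sinusoidal(beat, beats_per_bar, dim)
    phrase_pos = bar % bars_per_phrase + beat / beats_per_bar
    phrase_level = sinusoidal(phrase_pos, bars_per_phrase, dim)
    return beat_level + phrase_level


if __name__ == "__main__":
    melody = [Note(0, 0.0, 60, 1.0), Note(0, 1.0, 64, 1.0), Note(1, 0.0, 67, 2.0)]
    print(encode_score(melody))
    print(phrasing_embedding(1, 0.0))
```

Because the bar-in-phrase component repeats every `bars_per_phrase` bars, the same beat in bar 0 and bar 4 receives an identical phrasing vector while neighbouring bars are distinguished, which is one way a model could relate material that sits at the same structural position in different phrases.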

±ı≥¢ƒÄ, MIMA collaborator and Creative Director of sonic branding agency Maison Mercury Jones, composed the music for the first two Mercury flyby movies with artist Ingmar Kamalagharan. The two compositions formed the basis of the third.

“We wanted to know what would happen if we fed AMI the ingredients from the first two compositions,” said ±ı≥¢ƒÄ.

“This gave us the seeds for the new composition which we then carefully selected, edited and weaved together with new elements.

“Using this technology almost acts as a metaphor for the Mercury mission; there's a sense of excitement but there's also trepidation, much like with AI.”

For ±ı≥¢ƒÄ, the early adoption of AI in their music follows a career of breaking boundaries.

“I’ve had a lifelong fascination with technology and consciousness, particularly what makes us human,” ±ı≥¢ƒÄ continued.

“So the idea of AI in general and the possibilities it raises have fascinated me ever since I watched the film Metropolis as a child.

“I’ve always been into music technology and in some ways generative composition, like with AMI, feels like an almost natural progression.

“For me there are two strands to it - one is the questioning of our usefulness as human creators, and this can create a lot of fear among artists. There’s also another fear at play - the fear of missing out or being left behind.

“I’m finding that the more you work with this type of technology, the more it can teach you. In that sense I’m interested in what it can teach us about ourselves as human creators. I think it’s one of those things that once we see what it can do, it changes things forever.

“It's a little bit like when humans first saw the waveform. As soon as you see that representation of an audio wave, you can't unsee it and it changes how you hear and experience music. I think we're at the dawn of that with AI as well.”

From a user’s perspective, AMI is an assistive compositional tool. Its interface allows composers and creators to upload a MIDI file as the starting point for a new composition. AMI then generates new musical material based on this musical seed. Composers can adjust parameters ranging from instrumentation, musical mode and metre through to more abstract qualities such as ‘adventurousness’.
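AMI’s actual interface and parameters are not documented in this article. The Python sketch below is a hypothetical illustration of the seed-and-parameters workflow: it takes a seed melody (a list of MIDI pitch numbers, standing in for a parsed MIDI file), a musical mode and an `adventurousness` value, which it treats as a sampling temperature. That interpretation of ‘adventurousness’, the mode table and the step-size scoring rule that stands in for a trained model are all assumptions.

```python
import math
import random

# Hypothetical mode table (semitone offsets from the tonic); not taken from AMI.
MODES = {
    "ionian": [0, 2, 4, 5, 7, 9, 11],
    "dorian": [0, 2, 3, 5, 7, 9, 10],
    "aeolian": [0, 2, 3, 5, 7, 8, 10],
}


def in_mode(pitch, tonic, mode):
    """True if a MIDI pitch belongs to the chosen mode on the given tonic."""
    return (pitch - tonic) % 12 in MODES[mode]


def sample_next(prev_pitch, tonic, mode, adventurousness, rng):
    """Sample the next pitch using temperature-scaled softmax weights."""
    candidates = [
        p for p in range(prev_pitch - 12, prev_pitch + 13)
        if p != prev_pitch and in_mode(p, tonic, mode)
    ]
    # Stand-in for a trained model's scores: smaller melodic steps score higher.
    scores = [-abs(p - prev_pitch) for p in candidates]
    # Assumption: 'adventurousness' acts as temperature. Low values favour the
    # top-scoring steps; high values flatten the distribution towards leaps.
    temperature = max(adventurousness, 1e-6)
    best = max(scores)
    # Subtract the best score before exponentiating so the weights never all underflow.
    weights = [math.exp((s - best) / temperature) for s in scores]
    return rng.choices(candidates, weights=weights, k=1)[0]


def continue_seed(seed, n_notes, tonic=60, mode="ionian",
                  adventurousness=1.0, random_seed=0):
    """Extend a seed melody (list of MIDI pitches) by n_notes generated pitches."""
    rng = random.Random(random_seed)
    melody = list(seed)
    for _ in range(n_notes):
        melody.append(sample_next(melody[-1], tonic, mode, adventurousness, rng))
    return melody


if __name__ == "__main__":
    seed = [60, 62, 64]
    print(continue_seed(seed, 8, mode="dorian", adventurousness=0.1))
    print(continue_seed(seed, 8, mode="dorian", adventurousness=5.0))
```

With a low value the continuation moves almost entirely by the smallest available steps in the chosen mode; raising it makes larger leaps more likely. Every generated note stays in the mode, so the composer still decides which of the resulting options to keep.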

“A lot of the time people's reaction initially is: ‘Isn't using AI cutting corners and making life easy for yourself?’” ±ı≥¢ƒÄ added.

“In reality it takes longer. It takes more time because one of the things that you come up against is sort of choice paralysis, because you have dozens, if not hundreds more options for every single juncture. A lot of composition is about making choices. With AMI you make every decision, but with a wider range of possibilities at your disposal.”

Professor Guy Brown, Head of the University’s Department of Computer Science, said: “We really value the collaborative research that we are doing with ±ı≥¢ƒÄ, and it’s so gratifying to see our AI tools being used to make such beautiful music. Our goal is very much to work with musicians to develop AI systems that support and extend their creative endeavours, rather than replace them at the touch of a button. ±ı≥¢ƒÄ’s music for the BepiColombo mission is a perfect example of human composer and AI system working in perfect harmony.”

Professor Nicola Dibben, from the University’s Department of Music, said: “AI is about to have a huge impact on music making and dissemination. We’re proud to be working with ±ı≥¢ƒÄ to understand the implications of AI music generation and to create a future for Music AI together which is fair and inclusive.”